| "Rules: Logic and Applications" 2nd Workshop, Dec, 2019 |

| Aesthetic Morphisms |

| Jocelyn Ireson-Paine |

| www.jocelyns-cartoons.uk/rules2019/ |

I'll now turn away from cataloguing aesthetic morphisms, to argue that we need high-level knowledge in artistic image processing. A morphism catalogue could help provide this. I'll begin my argument by looking at style transfer, and then discuss why current implementations — which lack high-level knowledge — are inadequate.

Style transfer is the generic term: rendering one artwork or photo in the style of another. DeepArt is a particular neural-net machine-learning algorithm for style transfer, based on a breakthrough by Leon Gatys, Alexander Ecker and Matthias Bethge. I shall argue that DeepArt and similar algorithms can't style-transfer from Cubism, because they can't understand the Cubists' intentions.

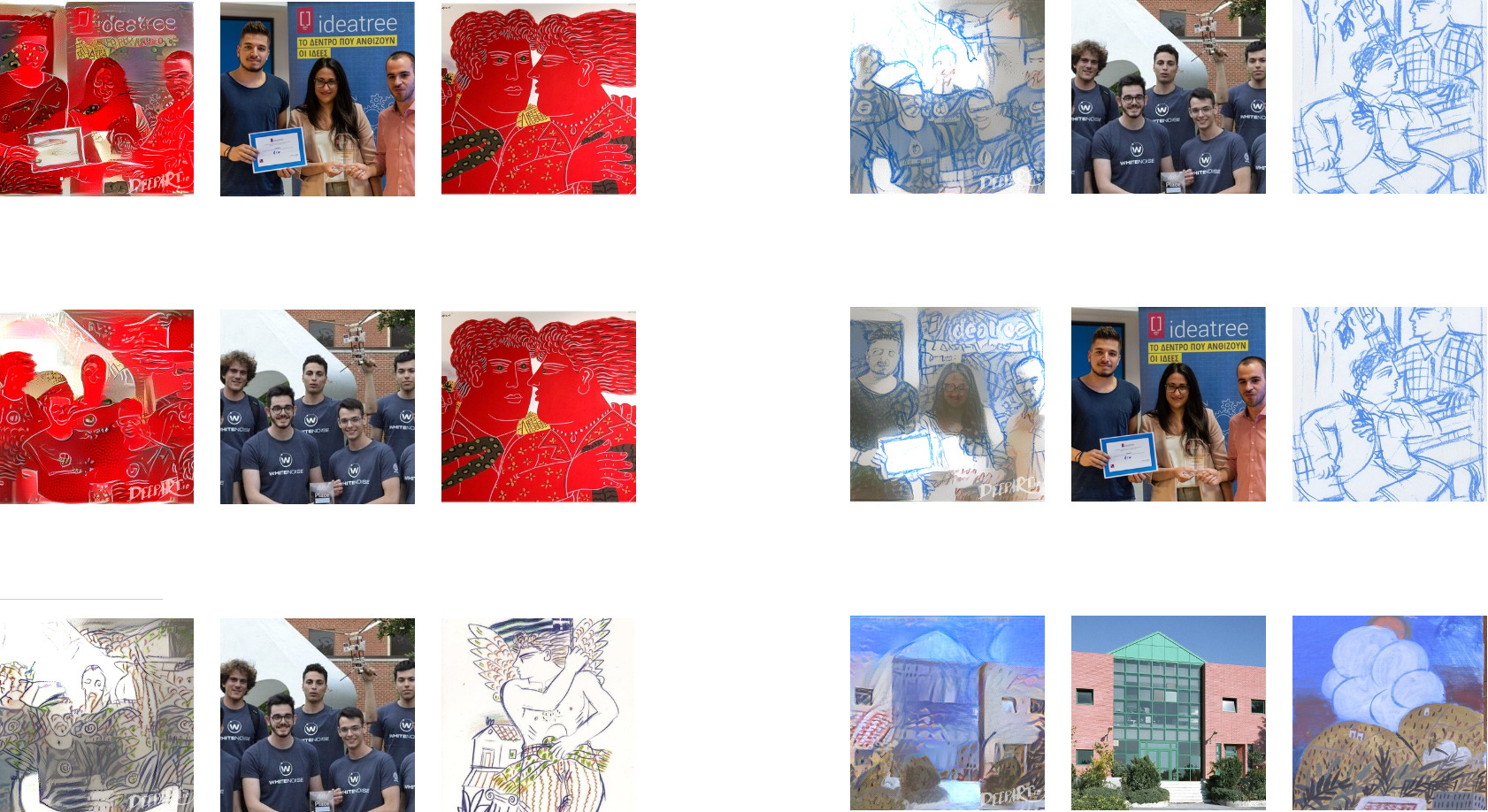

First, some examples of style transfer, run on Gatys, Ecker and Bethge's website deepart.io. This allows you to submit an image to be restyled, and another image to set the new style.

My experiments below use the paintings of the Athenian artist Alekos Fassianos to set a style, and photographs from the National Technical University of Athens, NTUA. In each, the object image is in the middle, the styling image on the right, and the result on the left. The results are fun, but it's obvious that DeepArt doesn't understand how to transfer from Fassianos's stylised characters to the real people in the photos. It hasn't even done a very good job on the left wing of the NTUA building bottom right. Notice that it's incorporated the red-stipple pattern from Fassianos's foreground into the wall.

In this connection, note Mark Liberman's post "AI is brittle" in the Language Log blog. He is writing about speech-to-text systems, but what he says is relevant to style transfer too:

❝

Modern AI (almost) works because of machine learning techniques that find patterns in training data, rather than relying on human programming of explicit rules. A weakness of this approach has always been that generalization to material different in any way from the training set can be unpredictably poor. (Though of course rule- or constraint-based approaches to AI generally never even got off the ground at all.) "End-to-end" techniques, which eliminate human-defined layers like words, so that speech-to-text systems learn to map directly between sound waveforms and letter strings, are especially brittle.

❞
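One plausible reason for the red stipple leaking into the wall, noted above, is the way the published Gatys, Ecker and Bethge method summarises style: as correlations between feature channels (a Gram matrix), accumulated over every position in the image. The Python sketch below is a toy illustration under that assumption, not DeepArt's actual code. The function names and the tiny hand-made "feature maps" are hypothetical. It shows that moving features to different positions leaves the style summary unchanged, so the summary cannot record that a texture belongs to the foreground rather than to a wall.

```python
# Toy sketch: a Gram-matrix "style summary" ignores where features occur.
# Assumption: style is summarised as channel-by-channel correlations,
# as in the published Gatys, Ecker and Bethge approach. All names and
# data here are hypothetical and for illustration only.

def gram_matrix(features):
    """Return channel correlations averaged over all positions.

    `features` is a list of channels; each channel is a flat list of
    activations, one per image position.
    """
    n_positions = len(features[0])
    return [
        [sum(a * b for a, b in zip(ch_i, ch_j)) / n_positions
         for ch_j in features]
        for ch_i in features
    ]


def rearrange_positions(features, order):
    """Move every channel's activations to new positions given by `order`."""
    return [[channel[i] for i in order] for channel in features]


# Two channels (say, "red stipple" and "vertical edge") over four positions.
features = [
    [1.0, 0.0, 2.0, 0.0],
    [0.0, 3.0, 1.0, 0.0],
]

# The same activations, but in mirrored positions.
moved = rearrange_positions(features, [3, 2, 1, 0])

# The style summary is identical although the layout has changed.
print(gram_matrix(features))
print(gram_matrix(features) == gram_matrix(moved))
```

A catalogue of morphisms could supply exactly what this summary throws away: knowledge of which part of the picture a texture or distortion is meant to apply to.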